News

The CORE news hub

Middleware Development: Building a Unified Data Platform

Author: Maria Tassi, Nikos Gkevrekis

3rd July 2025

The CORE Innovation Centre technical team has released the alpha version of a middleware platform that provides a unified solution for managing heterogeneous data flows across different data sources.

It is a flexible and scalable platform, which offers a robust foundation for seamless data ingestion, storage, processing, and secure access across diverse systems and demonstration sites. The development of this middleware platform is in line with CORE’s digital transformation mission, helping organisations accelerate their transition through cutting-edge research and technology development that addresses the real-world barriers hindering progress for many manufacturers, regardless of their specific industry.

Architecture Overview

The middleware is structured around a layered architecture (see the figure below), which consists of four primary layers – Ingestion, Storage, Processing, and Consumption – all supported by Orchestration and Monitoring layers. These interconnected components ensure that the platform can handle a wide spectrum of data types while maintaining operational coherence and traceability.

Middleware architecture

Key features of the architecture

Multi-Source Data Ingestion: Designed to integrate heterogeneous data streams, the ingestion layer supports:

MQTT for real-time data

REST APIs for batched real-time and historical data

File uploads (e.g. images, GIS) through a fileserver

It also supports ETL – Extract, Transform, Load – processes and performs data validation on entry to maintain data quality and consistency.
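As a rough illustration of validation on entry, the sketch below checks an incoming record against a small schema before it is accepted. The field names (`device_id`, `timestamp`, `value`) and the `validate_record` helper are assumptions made for this example and are not taken from the platform itself.

```python
from datetime import datetime

# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"device_id": str, "timestamp": str, "value": float}


def validate_record(record: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the record is valid."""
    errors = []
    for field, expected in SCHEMA.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            errors.append(f"wrong type for {field}")
    # Timestamps are assumed to arrive as ISO 8601 strings.
    if isinstance(record.get("timestamp"), str):
        try:
            datetime.fromisoformat(record["timestamp"])
        except ValueError:
            errors.append("timestamp is not ISO 8601")
    return errors


good = {"device_id": "sensor-1", "timestamp": "2025-07-03T10:00:00", "value": 21.5}
bad = {"device_id": "sensor-1", "timestamp": "yesterday"}
print(validate_record(good))
print(validate_record(bad))
```

Returning a list of errors rather than raising on the first one lets a caller report every problem with a rejected record at once.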

Versatile Storage: The storage layer is optimized for various data types:

large files

structured data

time-series data

Features like pagination and sorting enhance performance, especially for large-scale datasets.
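To make the pagination and sorting idea concrete, here is a minimal in-memory sketch. The `paginate` helper and its parameters are hypothetical; a real storage layer would normally push sorting and paging down into the database query rather than doing it in application code.

```python
def paginate(rows, sort_key, page=1, page_size=2, descending=False):
    """Sort rows by one field and return a single page plus the total count."""
    ordered = sorted(rows, key=lambda r: r[sort_key], reverse=descending)
    start = (page - 1) * page_size
    return {
        "total": len(ordered),
        "page": page,
        "items": ordered[start:start + page_size],
    }


rows = [{"id": 3, "temp": 20.1}, {"id": 1, "temp": 22.4}, {"id": 2, "temp": 19.8}]
first_page = paginate(rows, sort_key="id", page=1, page_size=2)
print(first_page["items"])
```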

Secure and Dynamic Data Access: The consumption layer exposes data via RESTful APIs, featuring:

Token-based authentication

Role-based authorisation

This way, users can query real-time data, historical records, and batched data ingested from real-time sources, specify time ranges, and retrieve files in original or compressed formats. The system also supports dynamic endpoints tailored to specific organizations or devices.
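The combination of token-based authentication and role-based authorisation can be sketched with the standard library alone. Everything here is an assumption for illustration: the token layout, the `SECRET` key, and the `PERMISSIONS` table are hypothetical, and a production system would more likely rely on an established token standard with expiry.

```python
import hashlib
import hmac

SECRET = b"demo-secret"  # hypothetical signing key, for illustration only
# Hypothetical role model: role -> actions that role may perform.
PERMISSIONS = {"viewer": {"read"}, "admin": {"read", "write"}}


def issue_token(user: str, role: str) -> str:
    """Create a signed token of the form 'user:role:signature'."""
    payload = f"{user}:{role}"
    signature = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}:{signature}"


def authorize(token: str, action: str) -> bool:
    """Verify the token signature (authentication), then check the role (authorisation)."""
    try:
        user, role, signature = token.split(":")
    except ValueError:
        return False
    expected = hmac.new(SECRET, f"{user}:{role}".encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False
    return action in PERMISSIONS.get(role, set())


token = issue_token("alice", "viewer")
print(authorize(token, "read"), authorize(token, "write"))
```

A token whose role has been edited after signing fails the signature check, so a viewer cannot promote themselves to admin.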

Interoperability and Integration: The platform is built to work across multiple sources and demonstration sites.

Scalability and Extensibility: As an alpha release, the architecture anticipates future enhancements including real-time processing, advanced analytics modules, and tighter integration with external systems, supporting the evolving needs of diverse pilot sites.

Ingestion Layer

The development process began with the ingestion layer, which serves as the gateway for all incoming data. Designed with flexibility, this layer can receive data from real-time sources such as MQTT, scheduled or historical data via APIs, and large files like images and geospatial datasets through a fileserver. This fileserver was developed to support large document handling, enabling users to upload, download, and manage files in their original formats, in order to accommodate diverse data requirements from real-time data to large-scale datasets. In addition to managing data intake, the ingestion layer plays a key role in validating incoming information and preparing it for further use. It supports ETL operations, which ensure that data is harmonised, transformed when necessary, and made ready for further analysis or storage.
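The harmonisation step of such an ETL process can be pictured as mapping each source-specific payload onto one common record layout. The payload shapes and field names below are invented for the example; the actual formats handled by the platform are not described in this article.

```python
def harmonise(raw: dict, source: str) -> dict:
    """Map a source-specific payload onto one common record layout."""
    if source == "mqtt":
        # Assumed MQTT payload: {"dev": ..., "ts": ..., "val": ...}
        return {
            "device_id": raw["dev"],
            "timestamp": raw["ts"],
            "value": float(raw["val"]),
        }
    if source == "rest":
        # Assumed REST payload: {"deviceId": ..., "time": ..., "reading": ...}
        return {
            "device_id": raw["deviceId"],
            "timestamp": raw["time"],
            "value": float(raw["reading"]),
        }
    raise ValueError(f"unknown source: {source}")


mqtt_record = harmonise({"dev": "s1", "ts": "2025-07-03T10:00:00", "val": "21.5"}, "mqtt")
rest_record = harmonise({"deviceId": "s1", "time": "2025-07-03T10:05:00", "reading": 21.7}, "rest")
print(mqtt_record)
print(rest_record)
```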

Storage Layer

In parallel with the ingestion layer, significant progress was made on the storage layer. This layer is designed to efficiently store the wide variety of data collected by the system. It integrates multiple storage technologies, such as S3 buckets, PostgreSQL and TimescaleDB, to cover general data storage, files from the fileserver, and time-series data, ensuring optimal performance and scalability.
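One way to picture a multi-backend storage layer is a small routing function that picks a backend per record. The mapping used here (files to S3, timestamped readings to TimescaleDB, everything else to PostgreSQL) is an assumption for the sketch, as are the record fields; the article does not spell out which technology serves which data type.

```python
def choose_backend(record: dict) -> str:
    """Pick a storage backend for a record (assumed mapping, for illustration)."""
    if "file_path" in record:
        return "s3"  # large files such as images or GIS data
    if "timestamp" in record and "value" in record:
        return "timescaledb"  # time-series readings
    return "postgresql"  # general structured data


print(choose_backend({"file_path": "site-a/map.tif"}))
print(choose_backend({"timestamp": "2025-07-03T10:00:00", "value": 21.5}))
print(choose_backend({"organisation": "site-a", "contact": "ops"}))
```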

Consumption Layer

Development has also begun on the consumption layer, which is responsible for enabling secure access to the stored data. This layer currently provides REST APIs that are protected by token-based authentication and role-based authorization, ensuring that only authorized users can access sensitive information. Users can query batched real-time data, request historical data for specific time ranges, and retrieve files either in their original form or in compressed formats. They can also define pagination and sorting, which enhance the speed and efficiency of data retrieval. Additionally, the consumption layer supports dynamic endpoint creation based on organizational structures or specific device IDs, allowing it to adapt easily to the varying needs of different demo sites and stakeholders.
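The time-range queries and dynamic endpoints described above could look roughly like the following. The URL pattern produced by `endpoint_for` and the record layout are hypothetical, chosen only to show how an endpoint can be derived from an organisation and a device ID.

```python
from datetime import datetime


def endpoint_for(organisation: str, device_id: str) -> str:
    """Build a per-organisation, per-device path (hypothetical URL pattern)."""
    return f"/api/{organisation}/devices/{device_id}/data"


def query_range(records, start: str, end: str):
    """Return the records whose ISO 8601 timestamp lies within [start, end]."""
    lo, hi = datetime.fromisoformat(start), datetime.fromisoformat(end)
    return [r for r in records if lo <= datetime.fromisoformat(r["timestamp"]) <= hi]


records = [
    {"timestamp": "2025-07-01T08:00:00", "value": 1.0},
    {"timestamp": "2025-07-02T08:00:00", "value": 2.0},
    {"timestamp": "2025-07-03T08:00:00", "value": 3.0},
]
print(endpoint_for("site-a", "sensor-1"))
print(query_range(records, "2025-07-02T00:00:00", "2025-07-03T23:59:59"))
```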

Responding to real-life challenges

The system's complexity presented various challenges during development. Managing a variety of input formats, including real-time IoT data, historical API feeds, and large unstructured files, required the development of a flexible and adaptable ingestion system that can process heterogeneous types of data. Ensuring data quality across many formats and sources necessitated the development of robust ETL methods as well as versatile and dynamic schema validations.

Another challenge was security, as designing a secure system with token-based authentication and role-based authorisation presented difficulties in multi-site, multi-user scenarios. To balance flexibility with system performance, especially for large-scale time-series data and file management, storage solutions had to be carefully selected and configured.

Furthermore, developing and maintaining dynamic endpoints able to consume data as they are being ingested required a careful and complex database schema and management. Last but not least, deploying, managing and scaling numerous different data ingestion and consumption services required the development and use of complex custom orchestration and monitoring tools.

Conclusions

The release of the alpha version of the middleware platform marks a significant step toward a flexible and robust solution for managing heterogeneous data. With its layered architecture, it supports seamless data ingestion from real-time data flows, APIs, and large files, while ensuring efficient storage, validation, and secure access. Features such as ETL processing, dynamic endpoints, and multilevel authentication enable adaptability, interoperability, and data integrity across diverse sources.

The middleware platform has a substantial market impact, because it enables interoperable data exchange across several sources, boosting collaboration in various fields such as manufacturing, climate resilience, and industrial processes. This interoperability accelerates digital transformation by combining real-time, historical, and large-format data to create a secure, scalable infrastructure that improves decision-making and operational efficiency.

Designed to manage complex, multi-site systems, it provides dynamic endpoints and role-based access while establishing the groundwork for future features such as real-time analytics and AI integration.

Elements of a secure and interoperable middleware approach have been explored and developed within two of our Horizon EU projects: CARDIMED, which focuses on boosting Mediterranean climate resilience, and MASTERMINE, which focuses on building a digitalized copy of real-world mines through an Industrial Metaverse approach.

The newly released middleware platform is aligned with our CORE mission of accelerating digital transformation through cutting-edge research and technology development, especially in data interoperability, artificial intelligence, and industrial digitization. It demonstrates our dedication to developing smart, adaptive, and future-ready solutions that address real-world difficulties across industries.

The alpha version lays a strong foundation for future enhancements, including advanced analytics, real-time processing, and broader system integration, positioning the middleware as a key enabler in modern data ecosystems.

Data-Driven Digital Shadows for Process Manufacturing

Author: Iason Tzanetatos

26th June 2025

Circular TwAIn is a Horizon EU project which aims to research, develop, validate, and exploit a novel AI platform for circular manufacturing value chains. This AI platform can support the development of interoperable circular twins for end-to-end sustainability.

Within the scope of our activities in the Circular TwAIn project, CORE IC is responsible for the design, development and deployment of a data-driven Digital Shadow for an industrial process, such as that of a petrochemical plant (the Circular TwAIn end user, SOCAR).

In process manufacturing, particularly in complex and highly regulated industries like petrochemicals, the ability to monitor, analyse, and optimise operations in real time is essential for maintaining efficiency and competitiveness. The integration of advanced digital technologies is reshaping traditional operations, enhancing performance, and driving innovation. To realise this model, we utilised historical operational data that our partners at SOCAR kindly shared with the consortium.

Architectural Design of Process Digital Shadow

Working with our partners at TEKNOPAR and SOCAR, we defined their requirements from the technology and identified the following key objectives:

1. Perform anomaly detection on real-time sensorial data that depict the current conditions of the plant

2. Predict what the sensor readings will be in a 10-minute horizon (i.e., predict short-term future state of the plant)

3. Perform anomaly detection on the forecasts of the plant.

We started the development of a Digital Shadow for a manufacturing process by identifying the involved assets of the production line. Since we are dealing with a process where the involved assets interact with one another, the outputs of some of the assets are considered as inputs to other assets down the line.

After the involved assets and their interactions had been mapped, we moved to the mapping of the sensorial inputs/outputs of each asset. Relevant information such as SCADA schemes were used to determine the sensors that are considered as inputs and outputs of each machine.


To successfully identify any anomalous conditions for each sensorial signal, irrespective of the input/output characterization of the involved sensors, we monitored each signal individually.

We proceeded with training an Autoencoder-like Deep Learning model, to identify anomalous conditions on a per sensor level, meaning that the model examines the data point of each sensor individually.

Autoencoder Neural Network Architecture

As depicted in the figure above, an Autoencoder model comprises three main components:

1. The Encoder, where the input information is compressed

2. The Bottleneck layer, where a compressed low dimensional representation of the input is determined by the model

3. The Decoder, which reconstructs the input relying only on information retrieved by the Bottleneck layer

By training an Autoencoder model on high-quality operational data characterised as normal, we obtain a model that can identify significant deviations in the operations of the involved assets, because inputs that differ from the training data are reconstructed with a noticeably larger error.
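The encode, bottleneck, decode idea can be sketched without any deep learning library. The example below uses a linear autoencoder with a single bottleneck unit, fitted by power iteration (which amounts to keeping the first principal component), as a stand-in for the deep model described above. The window length, the toy data, and the threshold of 1.0 are all assumptions made for illustration.

```python
import math


def fit_linear_autoencoder(data, iterations=100):
    """Fit a one-unit linear autoencoder (first principal component) on normal data."""
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centred = [[row[j] - mean[j] for j in range(d)] for row in data]
    # Power iteration on the covariance structure finds the dominant direction.
    w = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        scores = [sum(r[j] * w[j] for j in range(d)) for r in centred]
        w = [sum(scores[i] * centred[i][j] for i in range(n)) for j in range(d)]
        norm = math.sqrt(sum(v * v for v in w)) or 1.0
        w = [v / norm for v in w]
    return mean, w


def reconstruction_errors(window, mean, w):
    """Encode to one number (the bottleneck), decode, and return the error per point."""
    code = sum((window[j] - mean[j]) * w[j] for j in range(len(w)))  # encoder
    decoded = [mean[j] + code * w[j] for j in range(len(w))]  # decoder
    return [abs(window[j] - decoded[j]) for j in range(len(w))]


# Toy "normal" windows from one sensor: the level drifts, but each window is flat.
normal_windows = [[c, c, c] for c in (10.0, 10.5, 11.0, 11.5, 12.0)]
mean, w = fit_linear_autoencoder(normal_windows)

THRESHOLD = 1.0  # assumed cut-off on reconstruction error
for window in ([10.8, 10.8, 10.8], [11.0, 16.0, 11.0]):
    worst = max(reconstruction_errors(window, mean, w))
    print(window, "anomalous" if worst > THRESHOLD else "normal")
```

The flat window is reconstructed almost perfectly, while the window containing a spike cannot be represented by the single bottleneck value and therefore exceeds the threshold.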

However, since this use case is particularly complex, we turned to niche models such as Reservoir Computing deep learning models. This family of models is well suited to time series data with complex patterns, such as the operational data of a manufacturing process.

From: Quantum reservoir computing implementation on coherently coupled quantum oscillators

By adopting the approach of the Autoencoder model, we formulate the problem in exactly the same manner, except that we swap models and use a Reservoir Computing model.

Forecasting

To forecast the manufacturing process 10 minutes ahead, our model needs to follow the same asset interactions as the actual plant.

Production flow on Petrochemical line

The process comprises three main components: a Reactor, an Absorber and a Stripper. The assets interact back and forth with one another, and these interactions must be replicated in the digital realm as well.

A Digital Shadow has been developed for each physical asset. We utilised the same family of Deep Learning models to perform forecasting, by switching the objective of the models. As a final step, we connected each model by following the physical domain, as previously mentioned.
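Connecting per-asset models along the physical flow can be sketched as follows. The wiring in `TOPOLOGY`, the `stub_model` placeholder (which stands in for a trained forecaster), and the choice of ten one-minute steps are all assumptions for this illustration and do not describe the actual plant or models.

```python
# Hypothetical wiring: asset -> assets whose outputs it consumes.
TOPOLOGY = {
    "reactor": ["stripper"],
    "absorber": ["reactor"],
    "stripper": ["absorber"],
}


def stub_model(own_value: float, upstream_values: list[float]) -> float:
    """Placeholder for a trained forecaster: blends own state with upstream inputs."""
    if not upstream_values:
        return own_value
    return 0.5 * own_value + 0.5 * sum(upstream_values) / len(upstream_values)


def forecast_step(state: dict) -> dict:
    """Advance every asset one step, feeding each model its upstream assets' last outputs."""
    return {
        asset: stub_model(state[asset], [state[u] for u in upstream])
        for asset, upstream in TOPOLOGY.items()
    }


def forecast(state: dict, steps: int) -> dict:
    """Roll the connected models forward for the given number of steps."""
    for _ in range(steps):
        state = forecast_step(state)
    return state


state = {"reactor": 100.0, "absorber": 80.0, "stripper": 60.0}
print(forecast_step(state))
print(forecast(state, steps=10))
```

Each step uses only the previous step's outputs, so feedback loops between assets are handled without needing a fixed evaluation order.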

Through working with our partners, our technical team successfully implemented a Process Digital Shadow that performs real-time anomaly detection, forecasts 10 minutes ahead, and classifies forecasted data points as normal or abnormal, offering the end-user an early warning system.

The s-X-AIPI project has concluded

Author: Vassia Lazaraki, Athanasia Sakavara, Nikos Makris, Clio Drimala

24th June 2025

The s-X-AIPI project aspired to transform the EU process and manufacturing industries by developing an innovative, open-source toolset of trustworthy self-X AI technologies. These AI systems are designed to operate with minimal human intervention and to continuously self-improve, boosting agility, resilience and sustainability throughout the product and process lifecycle.

s-X-AIPI supports industrial workers with smarter, faster decision-making, while promoting integration into a circular manufacturing economy. Utilising innovative AI tools enhances the design, development, operation and monitoring of plants, products and value chains.

s-X-AIPI was demonstrated in four industrial sectors (Asphalt, Steel, Aluminum, and Pharmaceuticals), showcasing a portfolio of trustworthy AI technologies, such as datasets, AI models, and applications, which were integrated into an open-source toolset. Key components of this toolset include an AI data pipeline with automatic computing capabilities, an autonomic manager based on MAPE-K models that supports Human In The Loop, and several AI systems based on continuous self-optimisation, self-configuration, self-healing and self-protection.

Launched in May 2022 with 14 partners from 6 different countries, the 3-year project concluded in April 2025. An online Final Review will be conducted in the summer of 2025, highlighting the significant achievements made during the project’s lifespan.

ADAPT AI-Powered Anomaly Detection – Steel Use Case

CORE IC developed ADAPT – Active Detection and Anomaly Processing with smart Thresholds - a cutting-edge anomaly detection system designed to enhance operational oversight in steel manufacturing, through state-of-the-art machine learning and automation.

Powered by a Conditional Variational Autoencoder (cVAE), ADAPT continuously monitors process data—including scrap input, in-process metrics, and product compositions—to identify deviations and present them to process experts.

The base model was trained in an unsupervised manner on large volumes of historical, unlabeled production data, an ideal approach for industrial environments where labeled anomalies are rare and difficult to obtain. A Bayesian optimisation framework was integrated into the training pipeline, to allow data-driven hyperparameter tuning. Finally, an explainability module was introduced to the inference for transparency and user trust: for each detected anomaly, ADAPT highlights the top contributing features, enabling experts to quickly understand root causes and take informed corrective action.
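One common way to surface the top contributing features of a reconstruction-based detector is to rank features by their individual reconstruction error. The sketch below assumes standardised inputs and uses invented feature names; it is not ADAPT's actual explainability module.

```python
def top_contributors(sample: dict, reconstruction: dict, k: int = 2) -> list[str]:
    """Rank features by squared reconstruction error and return the k largest."""
    errors = {name: (sample[name] - reconstruction[name]) ** 2 for name in sample}
    return sorted(errors, key=errors.get, reverse=True)[:k]


# Hypothetical standardised features for one flagged heat.
sample = {"scrap_copper": 2.5, "temperature": 0.3, "carbon": -0.6}
reconstruction = {"scrap_copper": 0.4, "temperature": 0.2, "carbon": 0.0}
print(top_contributors(sample, reconstruction))
```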

ADAPT’s strength lies in its ability to continuously evolve. It supports two complementary mechanisms for refining the base model:

A semi-supervised learning loop, which incorporates expert feedback on the identified anomalies through a Human-in-the-Loop (HITL) workflow. ADAPT’s active learning framework minimises the need for constant human intervention, while still leveraging expert input where it adds the most value, driving continuous operational decision support.

An unsupervised adaptation strategy on new, unseen data, which addresses both data drift and concept drift over time.

These fine-tuning processes, along with performance monitoring, model redeployment, and user feedback tracking, are fully automated within ADAPT’s MLOps pipeline. The system also logs and manages historical anomaly data, for tracking patterns, comparing model behavior over time, and supporting process audits or improvement initiatives. Experiment tracking, version control, and metadata management ensure that every model iteration is traceable, reproducible, and aligned with production requirements.

ADAPT End-to-end pipeline for robust, adaptive Anomaly Detection

Data Augmentation Techniques – Asphalt Use case

Software developments in the Asphalt Use Case (UC) faced a key limitation: the scarcity of high-quality laboratory test data. Although the data spans long operational periods, the overall volume remains low, making it difficult to train effective AI models for analysing asphalt behaviour and predicting performance outcomes. This lack of data particularly impacts supervised learning approaches, leading to class imbalance, reduced model robustness, and constrained generalisation. To address this challenge, CORE IC developed a three-stage data augmentation pipeline, illustrated below, that expanded the original dataset (~500 rows) to approximately 10³ rows.

Three stages of our Data Augmentation Technique

The first stage focused on imputing missing values using the K-Nearest Neighbors (KNN) algorithm, selecting the five closest data points to estimate missing entries. In the second stage, Gaussian noise was added to the dataset to introduce variability and promote model robustness, while preserving the data's underlying structure. The final stage involved experimentation with three generative AI models—Variational Autoencoder (VAE), Denoising Autoencoder (DAE), and ReaLTabFormer—each used to generate synthetic records that enriched the dataset. These enhanced datasets were then evaluated for their impact on predictive performance. Among them, the dataset generated by the VAE method emerged as the most effective, significantly enhancing the model’s accuracy and predictive performance.
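The first two stages can be sketched in plain Python. This is a simplified illustration only: the toy rows, the noise level `sigma`, and the helper names are assumptions, and the third, generative stage is not shown.

```python
import math
import random


def knn_impute(rows, k=5):
    """Fill None entries with the column mean over the k nearest complete rows.

    Assumes at least one row has no missing values.
    """
    complete = [r for r in rows if None not in r]
    filled = []
    for row in rows:
        if None not in row:
            filled.append(list(row))
            continue
        known = [j for j, v in enumerate(row) if v is not None]
        # Distance uses only the columns present in the incomplete row.
        nearest = sorted(
            complete,
            key=lambda c: math.sqrt(sum((c[j] - row[j]) ** 2 for j in known)),
        )[:k]
        filled.append([
            v if v is not None else sum(c[j] for c in nearest) / len(nearest)
            for j, v in enumerate(row)
        ])
    return filled


def add_gaussian_noise(rows, sigma=0.01, seed=0):
    """Return noisy copies of the rows; sigma is an assumed noise level."""
    rng = random.Random(seed)
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in rows]


rows = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0],
        [5.0, 50.0], [6.0, 60.0], [3.0, None]]
filled = knn_impute(rows, k=5)
augmented = filled + add_gaussian_noise(filled)
print(filled[-1])
print(len(rows), "->", len(augmented))
```

Appending the noisy copies to the imputed rows doubles the dataset while keeping every synthetic point close to a real measurement.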

Dissemination, Communication and Exploitation Activities

Only a few days have passed since the project wrapped up, and we cannot help reflecting on some valuable dissemination and communication (D&C) achievements. Over the past 3 years, CORE IC devised and led the dissemination and communication strategy, working hand-in-hand with the entire consortium to maximise the project's visibility and impact.

The s-X-AIPI team participated in 24 high-impact events presenting their findings and organised the “Transforming Process Industries with AI” project-dedicated concluding event on 9 April, 2025 in Belgrade. These events offered remarkable opportunities for an extensive audience reach across significant target groups worldwide including professionals from research and academia, industry, IT, software, and technology, business consulting, EU institutions, national/regional, and local authorities, as well as policy making, investors and financial stakeholders, specific end user communities, association representatives and the general public and media.

Beyond that, consortium members generated 8 open-access scientific articles (6 already published and 2 currently in the publication process), an important legacy of the project. The full list can be found on the project website or the ZENODO repository. The AI4SAM Cluster was also formed with two more EU-funded projects, AIDEAS and Circular TwAIn, to expand the project’s impact beyond individual efforts. This cross-project collaboration ranged from joint participation in major conferences, targeted webinars and the s-X-AIPI final event to collaboration on digital communication activities to amplify each project’s outreach.

The s-X-AIPI website, designed and maintained by CORE IC, will continue as a central hub for useful information and resources. On the site, visitors can learn more about the project’s final results, important research activities performed and landmark research news of each project phase through 7 press-releases, 9 newsletter issues, videos, open-access scientific papers, public deliverables and training courses.

Additional strategic digital communication efforts included the creation of 13 videos, all available on YouTube, to convey the s-X-AIPI concept and remarkable achievements in a more engaging manner than text-based content. The project also shaped valuable online communities on LinkedIn and X, significantly expanding its reach, another reflection of the overall effectiveness of the D&C strategy.

For the Exploitable Results (ERs) that are closer to the market, CORE IC used its Profit Simulation Tool (PST) to forecast the financial revenues during the post-project commercialisation period. CORE’s PST offers a structured approach to support commercialisation planning by combining strategic business insights with market data. The financial forecasts provided by the PST were integrated effectively into the project’s exploitation strategy.

Further support from the CORE IC team

SIDENOR, a steel making facility in Spain, worked in s-X-AIPI with a focus on optimising scrap usage, especially addressing challenges from contaminants such as copper commonly found in lower-quality external scrap. Their main goal is to produce high-quality steel, prevent downstream surface defects, and minimise energy consumption in the Electric Arc Furnace (EAF) melting process. CORE IC contributed to this effort by supporting partners BFI and MSI through the development of anomaly detection software, as part of the overall solution.

Additionally, CORE IC was involved in the Asphalt use case. The aim of this use case was to target circularity of the value chain, from quarry to road, by enhancing quality control of feedstock (aggregates, bitumen, recycled asphalt), improving the overall sustainability of the production process (including asphalt paving) and the quality of final product (asphalt mix). Partners CARTIF, DEUSER, and EIFFAGE, also working on this use case, leveraged the augmented data generated by CORE IC to enhance model performance.

CORE Group at the 2025 International Smart Factory Summit

Author: Alexandros Patrikios

19th June 2025

The 6th International Smart Factory Summit took place earlier this month, bringing together smart factory innovators from all over the world to explore the future of smart manufacturing.

During the Summit, Dr. Nikos Kyriakoulis, CORE Group Co-Founder and Managing Partner, participated in a pitching session for the Greek Smart Factory, our CORE initiative facilitated through the Twin4Twin project.

The Summit

Hosted by our Twin4Twin partners Swiss Smart Factory (SSF), the Summit has served as a global platform and an annual gathering for the smart factory community to discuss how these ecosystems can transform industrial operations.

Under the theme “Deep Tech Smart Factory – Uniting Humans, AI, Robots & Processes”, ISFS25 focused on the economic and societal impacts of emerging deep technologies and their role in reshaping manufacturing. The three-day event was geared toward international decision-makers from both the public and private sectors, and brought together a global community of experts from Europe, Asia, Africa, South and North America.

The Greek Smart Factory pitching session

Dr. Nikos Kyriakoulis took the stage to present the Greek Smart Factory, a test and demo platform that brings together manufacturers, tech providers, and academia to drive innovation in real-world industrial settings. During his talk, he presented the strengths of the GSF, as well as key steps forward towards the bigger picture of bringing the initiative to life.

If you would like to know more about the GSF initiative or express your interest, you can fill in the form available here and our team will reach out.

Stefanos Kokkorikos, CORE Group Co-Founder and Managing Partner, was also in attendance. Stefanos Kokkorikos is also the Project Coordinator for the Twin4Twin Project, an EU Horizon Widening project and a big milestone for CORE Group and CORE IC. You can find more information on the Twin4Twin project website.

Our warmest thanks to the Swiss Smart Factory for the ongoing collaboration.

Optimising Battery Performance and RUL with CE-DSS

Author: Christina Vlassi

June 3rd 2025

Our tech team designed a Decision Support System (DSS) solution that enhances battery usage decision making, paired with an innovative Remaining Useful Life (RUL) estimation module, designed for smarter analysis and long-term optimisation.

We developed the solution as part of the DaCapo project, for end-user Fairphone. DaCapo aims to create human-centric digital tools and services which improve the adoption of Circular Economy (CE) strategies throughout manufacturing value chains and product lifecycles. The project has been ongoing for 2 and a half years and comprises 15 partners across 10 countries with a budget of 5.99 million euros.

Fairphone is a key DaCapo partner, founded in 2013 to address the “make-use-dispose” trend through its focus on modular smartphones that are durable and easy to repair.

How our solution works

Our aim was to develop a DSS that enhances decision-making around battery usage, through its pairing with a RUL estimation module – all of which is accessible to users through a dedicated web app. When users access the app, they get instant access to a battery report preview for all their devices.

From there, the system splits into its two core functionalities: RUL Estimation and Forecasting & Suggestions.

Remaining Useful Life Estimation

In this module, users can see battery capacity loss based on mathematical models that map out capacity degradation over time. These insights reveal how much useful life remains in the battery — giving users a clear view of their device’s condition.
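As a hedged illustration of how a degradation model turns into an RUL figure, the sketch below fits a straight line to capacity measurements and extrapolates to an end-of-life threshold. The linear model, the 80% threshold, and the sample data are assumptions for this example; the article does not state which mathematical models the module actually uses.

```python
def estimate_rul(cycles, capacities, end_of_life=0.8):
    """Fit capacity = a + b * cycle by least squares; return cycles left until end_of_life."""
    n = len(cycles)
    mean_x = sum(cycles) / n
    mean_y = sum(capacities) / n
    slope = sum(
        (x - mean_x) * (y - mean_y) for x, y in zip(cycles, capacities)
    ) / sum((x - mean_x) ** 2 for x in cycles)
    intercept = mean_y - slope * mean_x
    if slope >= 0:
        return None  # no measurable degradation yet
    cycle_at_eol = (end_of_life - intercept) / slope
    return max(0.0, cycle_at_eol - cycles[-1])


# Hypothetical measurements: capacity as a fraction of the original capacity.
cycles = [0, 100, 200, 300]
capacities = [1.00, 0.98, 0.96, 0.94]
print(estimate_rul(cycles, capacities))
```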

Where the DSS shines is through the provision of personalised guidance. By analysing app usage patterns, the system identifies the impact of each app on battery degradation. Through data analysis, we offer custom recommendations to help users understand and adjust usage patterns that are draining their battery life faster than necessary.

Forecasting & Suggestions

Harnessing the power of machine learning, the system predicts RUL 10 days ahead, tailored specifically to the user’s habits. With these predictions set, the web app goes on to offer actionable tips for longer battery life, with suggestions on temperature control, charging patterns and usage patterns. When users follow these tips, the RUL of their device improves, which is reflected visually in the app, showing the tangible benefits of informed action and encouraging sustainability-oriented behaviour.

Towards a Greener Future

By helping users extend the battery life of their devices, our system supports circular economy principles, reducing electronic waste and promoting sustainability. Users are empowered to optimise their battery usage, minimise capacity loss and make smarter, eco-conscious choices – one device at a time.

Multi‑Level Communication and Computation Middleware in MODUL4R

Author: Maria Tassi

May 30th 2025

The MODUL4R project is transforming industrial manufacturing by creating adaptable, resilient, and reconfigurable production systems. The backbone for this transformation is a distributed control framework for modular "Plug & Produce" (PnP) systems, the development of which was successfully completed in April 2025.

CORE IC led these research activities and developed the Multi‑level Communication and Computation Middleware (MCCM) - a fundamental innovation in MODUL4R - that bridges the gap between Cyber‑Physical Systems (CPS) and modular data exchange frameworks, allowing for smooth interoperability across edge, fog, and cloud layers.

The Role of MCCM in Modular Manufacturing

The MCCM was developed to tackle the challenges of modern manufacturing where agility, real‑time decision‑making, and scalability are essential, optimizing industrial operations through dynamic task distribution. By integrating edge, fog, and cloud computing, the MCCM ensures efficient data processing, low latency communication, and dynamic resource allocation. Its architecture is in line with the RAMI 4.0 reference model, supporting Industry 4.0 standards and enabling vendor‑agnostic communication.

Some of the key features of MCCM include:

Hybrid Computing Platform

Edge Layer: Serves as the entry point to the asset layer, ensuring low latency communication and direct interaction with physical systems, enabling real‑time interaction with shopfloor devices.

Fog Layer: Acts as an intermediary for intensive computation, edge orchestration, and near real‑time execution, minimising the latency for critical decision making while reducing cloud dependency.

Cloud Layer: Provides security, scalability, and robustness while enabling external communication with third‑party services.

Dynamic Reconfiguration

Enables real‑time adjustments to workflows, ensuring adaptability to changing production demands.

Supports Infrastructure‑as‑a‑Service (IaaS), allowing third‑party applications to be deployed via containerization (e.g., using Kubernetes).

Service‑Oriented Architecture

Facilitates modular deployment of microservices, ensuring flexibility and scalability.

Uses MQTT and REST APIs for seamless data exchange between layers.

Orchestration Across Layers

The Orchestration Controller offers dynamic service allocation across different levels, optimizing resource usage.

Enables multi‑cluster management, ensuring efficient workload distribution.

Orchestration within the MODUL4R hybrid computation environment

In more detail, MCCM establishes a service‑oriented, hybrid computation platform designed to orchestrate communication and computation within Cyber‑Physical Systems of Systems (CPSoS) networks. On the shopfloor, data generated by individual CPS components is acquired through industrial communication protocols such as OPC‑UA at the edge layer. This data is then transmitted via MQTT message brokers to corresponding MODUL4R services, where it is processed.
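Routing broker messages to the corresponding services relies on MQTT topic filters, where `+` matches exactly one topic level and `#` matches all remaining levels. The sketch below implements a simplified version of that matching rule (it ignores special cases such as topics beginning with `$`); the topic names and the `SUBSCRIPTIONS` table are hypothetical.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Simplified MQTT topic-filter matching with '+' and '#' wildcards."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == "#":
            return True  # multi-level wildcard matches the rest of the topic
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


# Hypothetical subscriptions: topic filter -> service name.
SUBSCRIPTIONS = {
    "factory/+/quality": "quality-service",
    "factory/#": "archive-service",
}


def route(topic: str) -> list[str]:
    """Return the services whose topic filter matches the published topic."""
    return [svc for pattern, svc in SUBSCRIPTIONS.items() if topic_matches(pattern, topic)]


print(route("factory/station1/quality"))
print(route("factory/station1/temperature"))
```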

These services, along with the brokers, are typically deployed across the fog and cloud layers, depending on latency requirements and computational needs. MCCM facilitates seamless deployment of services and brokers across the appropriate layers and physical locations, enabling modularity and adaptability. This architecture ensures that each system component can operate on the most suitable computational resource, enhancing efficiency, reducing latency, and supporting scalable system operation.
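A placement decision based on latency requirements and computational needs might be sketched as a simple rule. The thresholds and the `choose_layer` helper are invented for illustration and do not reflect MCCM's actual orchestration logic.

```python
def choose_layer(max_latency_ms: float, cpu_cores: float) -> str:
    """Pick a deployment layer from latency and compute needs (assumed thresholds)."""
    if max_latency_ms < 10:
        return "edge"  # direct interaction with shopfloor devices
    if max_latency_ms < 100 and cpu_cores <= 8:
        return "fog"  # near real-time execution close to the line
    return "cloud"  # heavy or latency-tolerant workloads


print(choose_layer(5, 1))
print(choose_layer(50, 4))
print(choose_layer(50, 32))
print(choose_layer(2000, 2))
```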

By enabling dynamic orchestration and real‑time reconfiguration, MCCM provides a flexible infrastructure that supports near real‑time production optimization. The fog layer plays a critical role in enabling low‑latency data processing and rapid decision‑making, essential for responsive manufacturing systems.

Furthermore, MCCM supports third‑party application deployment in an Infrastructure‑as‑a‑Service (IaaS) model using containerization technologies such as Docker and Kubernetes. This approach not only enhances scalability and flexibility but also allows for real‑time system updates and adjustments without production downtime. To maintain system integrity and continuity, MCCM incorporates version control and traceability mechanisms, allowing manufacturers to roll back or update services and algorithms as needed—ensuring both operational stability and adaptability.
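The version control and rollback mechanism can be pictured as a registry that remembers every deployed version of a service. The `ServiceRegistry` class below is a hypothetical, in-memory sketch; in a containerised setup this bookkeeping would typically be delegated to the orchestration platform.

```python
class ServiceRegistry:
    """Keeps the deployment history of each service so it can be rolled back."""

    def __init__(self):
        self._history = {}

    def deploy(self, service: str, version: str) -> None:
        """Record a new version as the current one."""
        self._history.setdefault(service, []).append(version)

    def current(self, service: str) -> str:
        """Return the currently deployed version."""
        return self._history[service][-1]

    def rollback(self, service: str) -> str:
        """Drop the current version and return the one that becomes active."""
        versions = self._history[service]
        if len(versions) < 2:
            raise ValueError("no earlier version to roll back to")
        versions.pop()
        return versions[-1]


registry = ServiceRegistry()
registry.deploy("quality-check", "1.0.0")
registry.deploy("quality-check", "1.1.0")
print(registry.current("quality-check"))
print(registry.rollback("quality-check"))
```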

Implementation in MODUL4R Use Cases

The MCCM has been successfully deployed across MODUL4R’s pilot cases, demonstrating its versatility and impact:

FFT Use Case: The Quality Check station transmits capacitor inspection data via MQTT to the fog layer, where it is processed and forwarded to the cloud for analytics.

SSF Use Case: A soldering production system uses the MCCM to synchronize data from multiple stations, ensuring real‑time quality monitoring and control.

EMO Use Case: A CNC milling machine streams sensor data through MCCM to the cloud for predictive maintenance analytics.

NECO Use Case: MCCM orchestrates robotic arm coordination and metrology data.
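The use cases above all move station data over MQTT. As a rough sketch of what one such message could look like, the snippet below builds a topic and a JSON payload for a single inspection reading. The topic layout, field names and OPC UA node identifier are assumptions for illustration; the actual MODUL4R message schema is not given in this article, and publishing the message would require an MQTT client, which is deliberately left out.

```python
import json
from datetime import datetime, timezone


def build_topic(site: str, station: str, signal: str) -> str:
    """Compose a hierarchical MQTT topic (the layout is an assumption)."""
    return f"modul4r/{site}/{station}/{signal}"


def build_payload(node_id: str, value: float, timestamp: datetime) -> str:
    """Serialise one reading as a JSON string ready for publication."""
    return json.dumps(
        {
            "node_id": node_id,           # OPC UA node the value was read from
            "value": value,
            "ts": timestamp.isoformat(),  # ISO 8601 timestamp
        },
        sort_keys=True,
    )


topic = build_topic("fft", "quality-check", "capacitor-inspection")
payload = build_payload(
    "ns=2;s=QualityCheck.Capacitance",  # hypothetical node identifier
    4.7,
    datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc),
)
```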

Benefits for the Industry

The MCCM delivers transformative advantages for manufacturers:

Reduced Latency: Fog computing minimizes delays for critical decision‑making.

Scalability: Containerized services allow easy expansion to meet production needs.

Interoperability: Standardized protocols such as MQTT ensure compatibility with legacy and modern systems.

Resilience: Dynamic reconfiguration enhances system robustness against disruptions.

Security: Frameworks and tools such as cloud message brokers (Kafka, MQTT) and GAIA‑X policies ensure secure data management and distribution, all the way from edge sensors to cloud services.

Conclusions

The MCCM delivers transformative advantages for manufacturers by improving responsiveness, scalability, interoperability, and robustness in production systems. Using fog computing, MCCM minimizes latency through localized data processing, allowing for quicker, real‑time decision‑making.

Its support for containerized services enables rapid reconfiguration and seamless deployment, making it simple to grow operations as needed. Standardised protocols such as MQTT and REST APIs enable interoperability with both legacy and modern systems, while dynamic reconfiguration capabilities improve system resilience, allowing operations to respond easily to disturbances or changing production requirements.

The Multi‑level Communication & Computation Middleware is a key component of the MODUL4R project, allowing for seamless integration of distributed control systems, real‑time analytics, and modular manufacturing workflows. By connecting edge, fog, and cloud layers, the MCCM enables manufacturers to achieve flexible, efficient, and sustainable operations, paving the way for the factories of the future.

3D Simulation‑driven optimisation for smart manufacturing lines

The Swiss Smart Factory use‑case

Author: Pantelis Papachristou

May 26th 2025

The latest demo by CORE Group’s technical team showcases a groundbreaking approach to drive digital transformation of manufacturing lines, using 3D simulation‑driven optimisation.

In collaboration with the Swiss Smart Factory (SSF) in Switzerland and using software developed by Visual Components, our team created a demo that shows how 3D simulations and data analytics can address critical bottlenecks and enhance overall efficiency and productivity in complex manufacturing operations.

3D simulation‑driven optimisation for smarter manufacturing

3D simulation is a powerful approach that uses advanced simulation software to create detailed virtual 3D models of production systems. These realistic virtual representations (3D scenes) accurately mirror the real world, including not only the physical geometry of objects and structures but also their texture, colour, lighting, and other visual properties.

In the context of manufacturing, 3D simulations are used to replicate entire production lines, individual machines, and workflows in a virtual environment. This method enables teams to identify inefficiencies and bottlenecks in the production line, optimise workflows, and test improvements without interrupting real‑world operations and risking downtime or disruption to actual production.

Key Features of the demo

The 3D simulation‑driven demo was developed in collaboration with the SSF, using simulation software developed by Visual Components. Our team secured both partnerships through the Twin4Twin and MODUL4R Horizon EU projects. The demo was initially showcased during CORE Innovation Days, Greece’s first Industry 4.0 conference organised and hosted by CORE Group, with a follow‑up demonstration at CORE Group’s Beyond Expo booth.

In our demo, we focused on the SSF’s production line for the F330 drone, which includes several automated and robotic‑enhanced stations, such as a 3D printing farm for the drone’s blades, assembly and packaging stations, and a warehouse. By analysing the flow of components and monitoring machine utilisation, we identified areas for improvement and tested various optimisation strategies to boost overall throughput and efficiency.

3D simulation of the production line: Using the Visual Components simulation software, a virtual model of SSF’s production line was developed, including individual workstations and the transition of components (e.g. through Automated Guided Vehicles). To obtain a clear view of the production line’s behavior and establish a baseline for its performance, virtual sensors were integrated in each station to measure its cycle time, utilisation and throughput over time.

Data‑driven analysis: Data from each station provided the foundation for identifying the bottlenecks and inefficiencies that slowed down production flow in SSF’s production line. Through a dedicated data analysis, we pinpointed the root causes of these bottlenecks and highlighted the problematic areas that are open to improvement and refinement.

Optimisation scenarios: Based on the data analysis results, we tested four targeted optimisation scenarios, to address the observed bottlenecks. For example, we introduced intermediate buffer storage systems, expanded the capacity of particular workstations and added extra stations. Running the 3D simulations on these different scenarios allowed us to compare the optimisation results with the baseline, quantifying the simulated improvements in terms of overall production rate. Interestingly, the results were not always straightforward, as adding extra stations can also create new bottlenecks elsewhere in the line, leading to a decrease in productivity. Such unexpected results underscore the need for simulations in complex manufacturing environments, where interactions between different workstations can have non‑linear and counterintuitive effects.

Final report: Finally, we created a report that juxtaposes the cost for implementing each optimisation scenario with the induced improvement in production rate. This analysis was crucial in understanding the trade‑offs between implementing these optimisation strategies and the tangible benefits they provided. Moreover, this report serves as the starting point for deriving a more detailed ROI analysis. By inserting specific financial metrics, such as production costs and profit margins, manufacturers can calculate the potential financial impact of each optimisation scenario.
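The analysis steps above can be sketched in a few lines: compute utilisation and throughput per station from the virtual sensor readings, treat the most utilised station as the likeliest bottleneck, and compare scenarios by the cost of each additional unit of production rate. This is a simplified illustration under stated assumptions, not the analysis used in the demo; the station figures, costs and the highest-utilisation heuristic are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StationStats:
    name: str
    busy_s: float      # seconds spent processing parts
    observed_s: float  # length of the observation window in seconds
    parts_done: int    # parts completed in the window

    @property
    def utilisation(self) -> float:
        return self.busy_s / self.observed_s

    @property
    def throughput_per_h(self) -> float:
        return self.parts_done * 3600 / self.observed_s


def find_bottleneck(stations):
    """Heuristic: the station with the highest utilisation limits the line."""
    return max(stations, key=lambda s: s.utilisation)


def cost_per_extra_unit(cost_eur, baseline_rate, scenario_rate):
    """Cost per additional unit/hour gained; infinite if the scenario does not help."""
    gain = scenario_rate - baseline_rate
    return cost_eur / gain if gain > 0 else float("inf")


# Made-up one-hour window, for illustration only.
stations = [
    StationStats("3d-printing", busy_s=3400, observed_s=3600, parts_done=30),
    StationStats("assembly", busy_s=2700, observed_s=3600, parts_done=30),
    StationStats("packaging", busy_s=1800, observed_s=3600, parts_done=30),
]
bottleneck = find_bottleneck(stations)
```

A scenario that lowers the production rate, as happened when extra stations created new bottlenecks, gets an infinite cost per extra unit here, which keeps it at the bottom of any ranking.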

The Future of Manufacturing: Smarter, Faster, More Efficient

Our demonstration underscored the potential of 3D simulation‑driven optimisation to revolutionise manufacturing processes. By combining simulations with data analytics and photorealistic 3D models, manufacturers can gain deep insights into their operations, experiment with different optimisation scenarios, and implement improvements without disrupting production.

This approach not only helps identify and solve bottlenecks but also enables manufacturers to make smarter, data‑driven decisions that lead to improved efficiency, reduced downtime, and increased productivity. In this way, manufacturers can test and refine their processes, helping their production lines operate at peak performance.

As industries continue to embrace digitalisation, 3D simulation‑driven optimisation will play an increasingly important role in shaping the future of manufacturing.

The ability to simulate, analyse, and optimise processes before implementing changes – “testing before investing” – offers a significant competitive advantage, allowing manufacturers to stay ahead of the curve and continue innovating in an ever‑evolving market.

The DiG_IT project has reached its conclusion

Author: Valia Iliopoulou

6th May 2025

After four and a half years, the DiG_IT project, which aimed at the transition to the Sustainable Digital Mine of the Future, has concluded. The main goal of the project was to address the mining industry’s need to move towards a sustainable use of resources while keeping people and the environment at the forefront of its priorities.

To this end, our consortium built an Industrial Internet of Things platform (IIoTp) which collects data from the mining industry (from humans, machines, environment and market) and transforms them into knowledge and actions. The aim of our IIoTp was to improve worker health and safety, make operations more efficient and minimise the environmental impact of mining.

The CORE team had a broad role in the project and contributed to the development of key components of the platform.

Safety Toolbox: Biosignal Analytics and Anomaly Detection

CORE was responsible for designing two out of the three components of the Intelligent safety toolbox that provides insights and supports the prevention of hazardous situations for people’s health in the mining field. The first component is the Biosignal Analytics & Anomaly Detection agent that is part of the Safety monitoring system of the Decision Support System (DSS).

The Biosignal Analytics component is a cloud agent responsible for monitoring and detecting changes in the health state of the individuals who work inside the mines. The system takes biometric data from the smart garments and pairs them with Anomaly Detection models that operate in real time, to detect any alarming states in the miners’ health.

Safety Toolbox: Air-quality smart monitoring and forecasting

The second component of the safety toolbox is the Air-quality smart monitoring and Forecasting agent, which is part of the Environmental and Safety monitoring system of the DSS. The agent is responsible for providing predictions of the air quality KPIs, that is, forecasting how the levels of specific airborne substances will evolve.

This is useful for safety reasons, such as notifying operators that a section of the mine should be evacuated when a dangerous substance exceeds the accepted limits. The development of the agent is based on Multi-Variate Neural Networks that are trained on data gathered from sensors deployed inside the mines.

Examples of actual and forecasted values for NO2

Analysis of target variables per hour
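To illustrate how a forecast can drive an evacuation notice, the sketch below scans an hourly forecast and reports the first hour at which a substance is predicted to exceed its limit. The function name, the forecast values and the limit are hypothetical; the neural network that produces the forecast in DiG_IT is not reproduced here.

```python
from typing import Optional, Sequence


def first_exceedance(forecast: Sequence[float], limit: float) -> Optional[int]:
    """Return how many hours ahead the forecast first exceeds the limit, or None."""
    for hours_ahead, value in enumerate(forecast, start=1):
        if value > limit:
            return hours_ahead
    return None


# Hypothetical hourly NO2 forecast and limit, for illustration only.
no2_forecast = [30.0, 35.0, 42.0, 50.0]
hours_until_alert = first_exceedance(no2_forecast, limit=40.0)
```

Returning the lead time rather than a plain yes/no lets the operator judge how much time remains to clear the affected section.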

Predictive Operation System I

One of our team’s primary roles was the development of a Predictive Operation System that utilises AI architectures. The system consisted of a forecasting agent that predicts the consumption of an individual asset. The knowledge of anticipated consumption is critical for planning of the field operations and optimising the industrial processes.

Our team relied on COREbeat, our end-to-end predictive maintenance platform, to assist Marini Marmi, a historic marble quarry in the north of Italy, with the operation of one of their critical assets. COREbeat was installed on an electrically powered milling machine used for cutting through marble cubes to produce marble slices. During the project, the operators received critical warnings from COREbeat. The CORE team investigated and indicated the origin of the fault to the Marini operators, who decided to halt the machine’s operation and place it in maintenance mode, preventing further damage to the machine.

More information on how COREbeat assisted the staff at Marini can be found here.

Predictive Operation System II

CORE was also primarily responsible for a Predictive Maintenance system that utilises Machine Learning methods and techniques. The system was designed to focus on individual assets, enabling the assessment of their overall health and the prediction of their future states in real-time. The assets selected for predictive maintenance were paired with Anomaly Detection models, specifically designed for the asset type, to maintain input consistency between applications. The goal was to create alerts that can provide early warnings to users about possible failures of the operating equipment.

Anomaly scores and anomaly thresholds of the test set
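One common way to turn anomaly scores into alerts is to derive a threshold from scores observed during normal operation and flag anything above it. The sketch below uses the mean plus three standard deviations; this rule, the function names and the sample scores are assumptions for illustration, since the article does not state how the DiG_IT thresholds were set.

```python
import statistics


def fit_threshold(normal_scores, k=3.0):
    """Threshold = mean + k * standard deviation of scores from normal operation."""
    mean = statistics.fmean(normal_scores)
    std = statistics.pstdev(normal_scores)
    return mean + k * std


def flag_anomalies(scores, threshold):
    """Return the positions of scores that exceed the threshold."""
    return [i for i, score in enumerate(scores) if score > threshold]


# Made-up anomaly scores, for illustration only.
threshold = fit_threshold([0.9, 1.0, 1.1, 1.0])
alerts = flag_anomalies([1.0, 1.3, 0.8, 2.0], threshold)
```

A larger `k` produces fewer, more conservative alerts; the right value depends on how costly a missed failure is compared with a false alarm.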

Commercialisation phase

To ensure successful commercialisation, CORE’s dedicated team developed an Innovation Strategy, focusing on clear value propositions and competition mapping. The main findings show that the European economy will need to multiply its production of critical raw materials, due to the increasing digitalisation of the economy and use of renewables. AI solutions are therefore essential if mining companies want to optimise operations while also protecting the environment and the health and safety of their employees.

Additionally, CORE developed business models for two key innovations introduced by the project: the DiG_IT IoT Platform, for mining operations optimisation, online measurements and failure predictions, and the DiG_IT Smart Garment, for improved safety and reduced risk of accidents and injuries. These were the two innovations with the highest TRL, having been exposed to real conditions.

Two white papers published by our Innovation team

Author: Ioannis Batas

March 20th 2025

At CORE Innovation Days, held earlier this year, our Innovation Department published two white papers: "Our Innovation Management Methodology for EC-funded Projects" and "Factory of the Future: What’s Happening, What’s Evolving, and What’s Next."

The two white papers were distributed to attendees, offering valuable insights into our innovation management methodology and the transformative potential of Industry 4.0.

White Paper #1: "Our Innovation Management Methodology for EC-funded Projects"

In the dynamic landscape of EU-funded research, turning innovative concepts into tangible solutions requires a strategic approach. In the first white paper, we present our Innovation Management methodology, which unfolds through a four-phase exploitation strategy designed to help researchers and consortium partners navigate the entire process. From identifying project Key Exploitable Results (KERs) to developing market roadmaps, our methodology ensures that results are protected through Intellectual Property Rights (IPR) and strategically positioned for market adoption.

Our Innovation Management methodology also features the CORE Exploitation Canvas, a tool we designed to streamline the market entry of KERs from EU-funded projects. The canvas guides teams through 10 key blocks: identifying partners and IP ownership, selecting IPR protection, analyzing the target market, addressing barriers, assessing broader impact, evaluating the State of the Art and Unique Selling Points, outlining exploitation routes, setting actions and milestones, and identifying costs and revenue streams. By simplifying complex innovation processes, the CORE Exploitation Canvas helps teams align outputs with market needs, accelerate adoption, and create sustainable impact.

You can download the white paper here.

White Paper #2: "Factory of the Future: What’s Happening, What’s Evolving, and What’s Next"

Industry 4.0 is revolutionising manufacturing, presenting both opportunities and challenges for companies. Our second paper explores the transformative potential of smart factories enabled by AI, IoT, and other advanced technologies. The global Industry 4.0 market is experiencing explosive growth, projected to reach €511 billion by 2032, driven by the shift towards scalable, automated, and interconnected production systems.

This white paper analyses key technologies shaping the future of manufacturing, from AI and IoT to robotics and additive manufacturing, while addressing the barriers companies should mitigate. It highlights critical issues like workforce upskilling, cybersecurity risks, and the integration of legacy systems, offering practical strategies to navigate these challenges. By bridging current capabilities with future possibilities, this paper serves as a guide for manufacturers looking to embrace digital transformation and secure long-term competitiveness in a rapidly evolving market.

Our insights for this second paper were further enriched by our involvement in leading the exploitation management activities of the MODUL4R and M4ESTRO projects.

You can download the white paper here.

White papers now available online

At CORE Group, we believe that knowledge sharing is essential for driving technological progress and empowering innovators to transform ideas into impactful solutions.

If you missed the event or want to explore further, both white papers are now accessible. Learn how CORE Innovation is shaping the future of research exploitation and innovation management.

For more information, don't hesitate to reach out to our Innovation Department.

FAIRE: Federated Artificial Intelligence for Remaining useful life Edge analytics

Revolutionising Industrial Operations with FAIRE: Federated AI for Predictive Maintenance

Author: Konstantina Tsioli, Pavlos Stavrou

February 20th 2025

At CORE Innovation Days in January, CORE unveiled a groundbreaking demonstration of FAIRE (Federated Artificial Intelligence for Remaining Useful Life Edge Analytics), a cutting-edge solution that combines AI, edge computing, and federated learning to address critical challenges in industrial operations.

This innovative approach not only enhances operational efficiency but also ensures data privacy and scalability, making it a game-changer for industries like manufacturing, energy, and pharmaceuticals.

What is FAIRE

FAIRE is a federated AI solution based on the MODUL4R and RE4DY EU projects, designed to optimise industrial processes by leveraging edge computing and federated learning.

It enables real-time data processing and predictive analytics, while keeping sensitive data secure and on-premise. The demonstration showed how FAIRE can be applied to predictive maintenance for CNC machines, but its applications extend far beyond this use case.

Key FAIRE Features

Edge Computing: This solution utilises edge devices deployed directly on the shop floor to collect and process data locally. This reduces latency, minimises bandwidth usage, and ensures real-time insights without relying on constant cloud connectivity.

In the demo, two edge devices were connected to CNC machines, collecting data relevant to tool wear and predicting the Remaining Useful Life (RUL) of milling tools.

Remaining Useful Life (RUL): RUL is a predictive estimate of the time left before a machine or component fails or requires maintenance, based on real-time data and historical performance patterns. In the context of FAIRE, the RUL model predicts tool wear in CNC machines, enabling proactive maintenance and reducing downtime while ensuring data privacy and security.

Federated Learning: FAIRE employs federated learning to enable collaborative intelligence across multiple machines or factories. Instead of sharing raw data, only model parameters (e.g., weights and weight updates) are sent to a central server, ensuring data privacy and compliance with regulations like GDPR. This approach allows machines to "learn" from each other, improving prediction accuracy and operational efficiency without compromising sensitive information.

Data Privacy and Security: By keeping data on-premise and sharing only model updates, FAIRE ensures that proprietary information remains secure. This is particularly important for industries with strict data protection requirements.

Scalability and Flexibility: FAIRE’s architecture is designed to scale effortlessly. As new machines or edge devices are added to the network, they can seamlessly integrate into the federated learning ecosystem, enhancing the system’s overall intelligence and resilience.
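As a rough illustration of what an RUL estimate involves, the sketch below fits a straight line to tool wear measurements and extrapolates to a wear limit. This is a deliberately simple stand-in, not the AI model used in FAIRE; the function name, the linear wear assumption and the sample values are all hypothetical.

```python
def estimate_rul(times_h, wear_mm, wear_limit_mm):
    """Fit a least-squares line to wear over time and extrapolate to the wear limit.

    Returns the remaining hours after the last measurement, or infinity
    if the fitted wear is not increasing.
    """
    n = len(times_h)
    if n < 2 or n != len(wear_mm):
        raise ValueError("need at least two paired measurements")
    mean_t = sum(times_h) / n
    mean_w = sum(wear_mm) / n
    sxx = sum((t - mean_t) ** 2 for t in times_h)
    if sxx == 0:
        raise ValueError("measurement times must not all be identical")
    sxy = sum((t - mean_t) * (w - mean_w) for t, w in zip(times_h, wear_mm))
    slope = sxy / sxx
    if slope <= 0:
        return float("inf")
    intercept = mean_w - slope * mean_t
    time_at_limit = (wear_limit_mm - intercept) / slope
    return max(0.0, time_at_limit - times_h[-1])


# Made-up wear readings: 0.1 mm of wear per hour, limit at 0.5 mm.
rul_hours = estimate_rul([0, 1, 2, 3], [0.0, 0.1, 0.2, 0.3], wear_limit_mm=0.5)
```

Real tool wear is rarely linear, which is one reason a learned model is used in practice; the sketch only shows the shape of the calculation.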

Predictive Maintenance for CNC Machines

The demonstration of the FAIRE solution focused on a real-life application: predictive maintenance for CNC machines. Here’s how it worked:

Data Collection: Two edge devices were connected to two CNC machines, collecting real-time data on tool wear and machine performance using industrial protocols like OPC-UA and MQTT.

Local Processing: The edge devices preprocessed the data locally, running AI models to detect anomalies and predict RUL. Results were displayed on monitors, providing operators with actionable insights.

Federated Learning: Model updates from each edge device were aggregated on a central server to update the global model. The updated model was then sent back to the edge devices, enhancing their predictive accuracy.

Real-Time Insights: Operators could then monitor tool wear and RUL in real time, enabling proactive maintenance and reducing downtime.
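The aggregation step in the workflow above can be sketched with federated averaging (FedAvg), a widely used scheme in which the server averages client parameters weighted by how many samples each client trained on. The article does not say which aggregation rule FAIRE uses, so FedAvg is an assumption here, as are the function name and the sample numbers.

```python
def federated_average(client_weights, client_sizes):
    """Average parameter vectors, weighting each client by its sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two edge devices, as in the demo; the parameter values are made up.
global_model = federated_average(
    client_weights=[[1.0, 2.0], [3.0, 4.0]],
    client_sizes=[1, 3],
)
```

Only the parameter lists cross the network in this scheme; the raw tool wear data used to produce them stays on each edge device.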

The benefits of FAIRE

FAIRE offers numerous benefits for industrial operations:

Smarter Machines: Continuous learning and adaptation improve machine performance and operational efficiency.

Enhanced Data Privacy: Sensitive data remains on-premise, ensuring compliance with data protection regulations and/or requirements.

Cost Optimisation: Reduced data transmission and proactive maintenance minimise operational costs.

Collaborative Intelligence: Federated learning enables machines to learn from each other, improving model accuracy across the network.

Scalability: The solution can easily scale to include additional machines or factories, making it suitable for large industrial networks.

Application areas

While the demonstration of the FAIRE solution used CNC machines as an example, its capabilities extend to various industries:

Pharmaceutical: In a sector where protecting sensitive data and production data is paramount, this solution safeguards data privacy and security.

Automotive: Enhance predictive maintenance for automotive production lines.

Aerospace: Improve the performance and reliability of aircraft components.

Energy and Smart Grids: Monitor and optimise power grid equipment like transformers and substations.

Mining: Optimise the operation of heavy machinery like excavators and drilling equipment.

FAIRE represents a significant leap forward in industrial AI, combining the power of edge computing and federated learning to deliver real-time insights, enhance data privacy, and optimise operations. By addressing critical challenges like unexpected downtime, inefficient data handling, and legacy equipment limitations, FAIRE empowers industries to achieve smarter, safer, and more efficient operations.

Solutions like FAIRE will play a critical role in shaping the future of industrial automation and data-driven decision-making.

Introducing Smart Data Management to Mining Operations

Authors: Konstantina Tsioli, Nikolaos Gevrekis, Konstantinos Plessas

February 13th 2025

The CORE Innovation Centre team has developed a key backend platform for the MASTERMINE project, which aspires to become the go-to ecosystem for mines that envision digitalisation, environmental sustainability, productivity monitoring and public acceptance.

A key module of the MASTERMINE project is Cybermine, which serves as the access point to the physical world and manages the field data for all components. It connects the physical and digital worlds through technologies like IIoT, the cloud, edge computing and machine learning, supporting smart mine design and predictive maintenance of equipment and vehicles.

Our team has developed a backend platform within the Cybermine module, which seamlessly integrates and manages data from various sources across the mining industry. As a smart data management system, it automates data collection, storage, and access, ensuring flexibility, scalability and efficiency in the use of data.

Here’s a look at the ingredients that make this platform innovative.

An overview of the back-end platform developed by the CORE IC team

Collecting Data from Different Sources

Everything begins with data sources, which can include sensors, devices, or systems in the mining industry that generate information. However, not all data is the same - different sources provide data in different formats, structures, and transmission methods. Some data is received in real-time, while other data arrives in scheduled batches. In some cases, end users may even need to manually upload files. The platform uses a multi-layered storage approach, enabling it to secure and organise data types like real-time updates, historical records, and large files. Tools such as S3 buckets, InfluxDB, and PostgreSQL ensure both speed and reliability.
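A multi-layered storage approach implies some rule for deciding where each incoming record goes. The sketch below routes files to object storage, timestamped measurements to a time-series store and everything else to a relational store. The mapping of data types to S3, InfluxDB and PostgreSQL, along with the field names used to tell records apart, is an assumption for illustration; no storage client is called.

```python
from enum import Enum


class Backend(Enum):
    OBJECT_STORE = "s3"        # large files
    TIME_SERIES = "influxdb"   # timestamped measurements
    RELATIONAL = "postgresql"  # structured records


def choose_backend(record: dict) -> Backend:
    """Pick a storage backend from the shape of the record (field names are assumed)."""
    if "file_name" in record:
        return Backend.OBJECT_STORE
    if "timestamp" in record and "value" in record:
        return Backend.TIME_SERIES
    return Backend.RELATIONAL
```

For example, `choose_backend({"timestamp": "2025-02-13T08:00:00Z", "value": 41.0})` selects the time-series store, while an uploaded survey file selects object storage.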

Making the Data Available to Users

Once stored, the data needs to be easily accessible. This is where the Consumption Layer comes in. This layer allows users to retrieve any data they need, whether it’s raw data straight from the source, processed insights, real-time feeds, or historical records. Through this layer, users can access raw or processed data quickly and efficiently, tailored to their specific needs, such as real-time monitoring or historical analysis.

Innovation: Making Data Collection Smarter

Traditionally, integrating data from different devices and sensors required custom-built services for each type of data source, making the process slow and complicated. The platform developed by our team eliminates this challenge by offering an automated, intelligent system that dynamically adapts to any new data source. Consequently, the effort needed to integrate new data sources is significantly reduced.
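One way to avoid a custom service per data source is to describe each source with a small mapping and let a single generic routine do the work. The sketch below shows that idea under stated assumptions: the source names, field names and the `normalise` function are hypothetical and do not describe how the CORE IC platform is actually built.

```python
# Each source is described by a mapping from its own field names to
# the platform's canonical names. Adding a source means adding an entry.
SOURCE_MAPPINGS = {
    "haul-truck-gps": {"lat": "latitude", "lon": "longitude", "t": "timestamp"},
    "dust-sensor": {"pm10_ugm3": "value", "time": "timestamp"},
}


def normalise(source_id: str, raw: dict) -> dict:
    """Rename source-specific fields to canonical names using the source's mapping."""
    mapping = SOURCE_MAPPINGS[source_id]
    missing = sorted(field for field in mapping if field not in raw)
    if missing:
        raise ValueError(f"{source_id} record is missing fields: {missing}")
    return {canonical: raw[field] for field, canonical in mapping.items()}


# Registering a new source requires configuration only, no new code.
SOURCE_MAPPINGS["conveyor-load"] = {"kg": "value", "ts": "timestamp"}
reading = normalise("conveyor-load", {"kg": 812.5, "ts": "2025-02-13T08:00:00Z"})
```

The design choice is to push source differences into data rather than code, so the integration effort for a new sensor shrinks to writing one mapping entry.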

Significance for the Mining Industry

Mining operations are known for their harsh conditions, making it challenging to collect and manage data effectively. A platform like the one developed by CORE IC is critical because it simplifies data integration and enables the seamless collection of data from diverse sources, even in environments where traditional methods struggle. Its robust architecture ensures data reliability and accessibility, even in remote or extreme locations.

The ability to interpret data ahead of time is crucial for mining operations, particularly regarding heavy machinery, where real-time insights can prevent breakdowns, enhance maintenance schedules, and ensure operational continuity. By transforming raw data into actionable insights, the platform empowers decision-makers — whether managers, operators, or engineers — to make informed decisions, improving safety, productivity, and efficiency. Ultimately, the platform supports innovation, sustainability, and operational resilience in the demanding context of the modern mining industry.

CORE Group participated in a focus group workshop at HALCOR

Author: Maria Tassi

November 13th 2024

A successful Focus Group Workshop was held on 31st of October 2024 at the HALCOR facilities, in Boeotia, Greece as part of the CARDIMED project.

Participants in the workshop included representatives from CORE Group, ICCS, NTUA, HALCOR employees and managers, as well as regional stakeholders, enabling collaboration and knowledge exchange.

The CARDIMED project

CARDIMED is a project funded by the Horizon Europe Programme focused on boosting Mediterranean climate resilience through widespread adoption of Nature-based Solutions (NBS) across regions and communities.

Our CORE team will develop a cloud-based orchestration middleware for efficient data handling across diverse sources, and also focus our efforts on industrial symbiosis through smart water management in the HALCOR demo site, using digital twin technologies.

The workshop aimed to promote innovative solutions in industrial manufacturing. It was conducted in the context of creating Digital Solutions that offer tailored views for visualising information to non-experts, citizens and others, with emphasis on the demonstration case of industrial symbiosis through smart water management.

Workshop goals and objectives

The main objective of the workshop was to engage end users in order to gather feedback and prioritise requirements, and then to translate the business requirements of the end user, HALCOR, into technical requirements, leading to the implementation of digital solutions and bringing innovation to the industry.

The workshop was opened by M.Sc. Efstathia Ziata (HALCOR), who presented the CARDIMED project and its objectives. Following her presentation, Dr. Ioannis Meintanis (CORE IC) gave insights on the digital twin solution, which is a replica of a physical asset that simulates its behaviour in a virtual environment, highlighting its role in supporting Water-Industrial Symbiosis within HALCOR's factories.

Dr. Maria Tassi (CORE IC) presented other Digital Solutions implemented as part of the HALCOR demo, such as the Nature-Based Solutions (NBS) definition and scenario-based impact assessment interface, the climate resilience dashboards and data storytelling, the citizen engagement app and intervention content management and the NBS exploitation and transferability support module, highlighting their potential to enhance efficiency and sustainability in operations.

Notable contributors to the round table discussions included M.Sc. Katerina Karagiannopoulou (ICCS) and M.Sc. Nikolaos Gevrekis (CORE IC), who provided valuable perspectives on the digital solutions.

Impact on Industry

The success of the workshop lies in the discussions on the various digital solutions with end users, who provided valuable feedback and prioritised the user requirements to be integrated in the Digital Twin solution. Their insights will be critical in shaping a final product that effectively addresses the evolving demands of the industry.

These innovations are set to significantly impact the manufacturing industry, by enhancing resource efficiency and sustainability. They will help optimise water usage and promote resource reuse across interconnected processes, leading to cost savings and reduced environmental footprints.

CORE Group’s collaboration with HALCOR

These technological advances will enable HALCOR to optimise its manufacturing processes and resource management in real time, resulting in improved operational efficiency, significant cost savings and reduced water consumption. By adopting sustainable practices, HALCOR can strengthen its reputation as an industry leader in sustainability and appeal to environmentally conscious stakeholders.

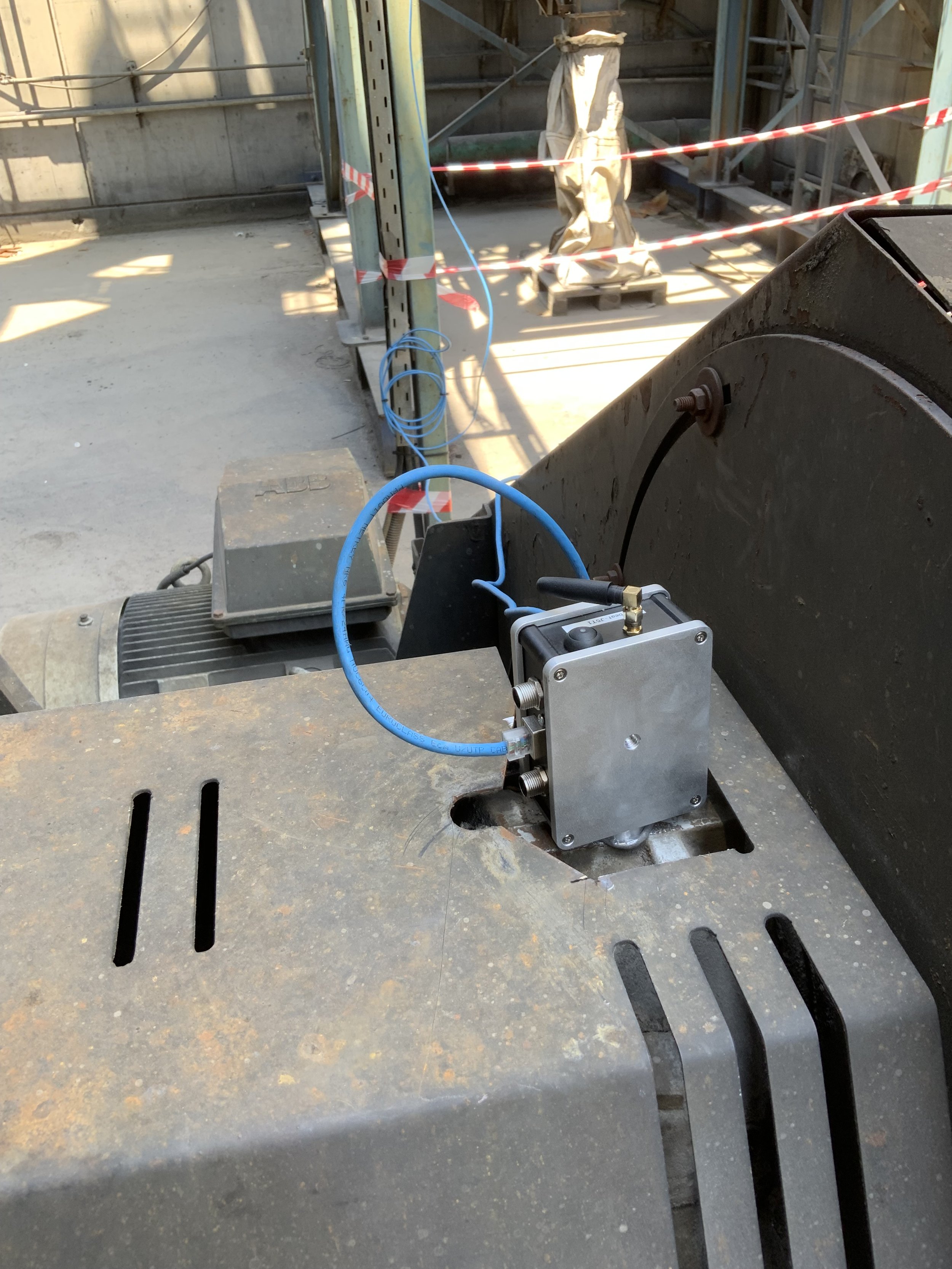

HALCOR is a strategic partner for CORE Group, with a collaboration extending across three more Horizon Europe projects, TRINEFLEX, StreamSTEP and THESEUS. As part of the TRINEFLEX project, HALCOR has integrated COREbeat, CORE Group’s all-in-one Predictive Maintenance Platform at its Copper Tubes Plant facility in Boeotia. COREbeat’s asset monitoring capabilities are helping HALCOR acquire deep monitoring insights and increase the availability, flexibility, efficiency and reliability of their equipment.

COREbeat, our all-in-one Predictive Maintenance solution, relies on the beatBox hardware component, pictured here.

The TEAMING.AI project reaches a successful conclusion

Authors: Maria Lentoudi, Ioannis Batas

26th September 2024

The TEAMING.AI project has officially wrapped up its activities, with a Final Review meeting held earlier this summer in Valencia, at the premises of Industrias Alegre. This meeting marked the culmination of 3.5 years of dedicated effort, showcasing the remarkable outcomes of this collaborative project.

Comprising a consortium of 15 partners from 8 countries, TEAMING.AI was launched in January 2021 with the goal of increasing the sustainability of EU production with the help of Artificial Intelligence. The project has since yielded remarkable results, including more than 23 open-access publications.

Project overview

TEAMING.AI project’s aim was to make breakthroughs in smart manufacturing by introducing greater customisation and personalisation of products and services in AI technologies. Through a new human and AI teaming framework, the aim of our consortium was to optimise manufacturing processes, maximising the strengths of both the human and AI elements, while maintaining and re-examining safety and ethical compliance guidelines.

This was achieved through the development of an innovative operational framework, designed to cope with the heterogeneity of data types and with the uncertainty and dynamic changes that arise in human-AI interaction, and to update more rapidly than pre-existing technologies allow.

Our CORE team led the Dissemination and Exploitation Work Package and was involved in various tasks within the project framework to expand TEAMING.AI’s impact. More specifically, we led the project’s strategic management and replicability, as well as its dissemination and communication strategy.

Strategic Management & Replicability of TEAMING.AI

The CORE innovation team was responsible for the strategic management of the consortium, identifying Key Exploitable Results of the project and carrying out market analysis. Our work for this part of the project included:

Identifying Market Barriers: Our team conducted a market barriers analysis, based on input provided through a custom questionnaire. The project’s end users were surveyed, and the survey was also circulated to the ICT-38 2020 projects, increasing our end user sample. After completing the survey, we identified mitigation strategies for the barriers discussed.

Pains & Gains: We identified the most significant pains our end users face based on a unique research plan. The results of this part of our research were highly impactful, being included in Chapter 23 of the “Artificial Intelligence in Manufacturing” open access book. You can find out more here.

Value Propositions: Our team identified the value propositions offered throughout the project, through interactive workshops with our partners to help us align the identified jobs, pains, and gains with the Teaming.AI Engine result.

PESTLE Analysis: A PESTLE analysis was performed to describe Political, Economic, Social, Technological, Legal and Environmental factors that are related to Teaming.AI. Results show strong political presence to enable further scale-up activities of the project’s results. The uncertain economic conditions may influence investment decisions. The social factors indicate the need for more efficient activities and upskilling. The technologies are emerging and considered enablers according to Gartner. Finally, from an environmental point of view, results have remained a little stagnant according to the IPCC.

Market Replication & Analysis: As a final task, our team worked on Market Replication. The technology providers relevant to Teaming.AI were considered a possible segment for replication besides the project’s end users. A workshop was held with the project’s technology providers to determine requirements to address these segments.

Dissemination and Communication Activities

When it comes to dissemination and communication, the evaluation revealed a strongly positive outcome for our team’s strategy. CORE worked hand-in-hand with the entire consortium to maximize the project's impact and ensure the project’s objectives were communicated effectively to relevant audiences and stakeholders.

The project’s official website acted as its main communication hub, supported by a strong presence on social media platforms, the creation of various communication materials, including 11 videos throughout the duration of the project, the publication of 33 media articles, the release of 10 dedicated newsletter editions, and 11 press releases. These efforts were aimed at increasing the project’s visibility and public engagement.

The TEAMING.AI consortium also produced 28 scientific peer-reviewed publications in top-ranked journals or conferences, attended 43 events delivering 38 presentations, promoted TEAMING.AI through 3 exhibit booths at key industry events, and organised one final conference. TEAMING.AI also joined the AI4MANUFACTURING Cluster and participated in 5 cluster workshops alongside 13 other H2020 and Horizon EU research projects to expand its impact.

A recording of the final workshop is available here for viewing on YouTube. Additionally, 10 more short videos introducing the TEAMING.AI concept and summarizing its research activities are accessible via the project’s YouTube channel.

The project’s website, designed and maintained by CORE, will continue as a central hub for useful information and resources. Visitors can learn more about important research activities performed and results through press-releases, newsletters, open-access scientific papers and public deliverables that can be found on the website.

The project has also shaped significant online communities, with more than 1,300 followers on LinkedIn and 900 on X.

The dissemination and communication activities have played a crucial role in ensuring that the TEAMING.AI project’s activities and results were effectively shared with both scientific and industry communities, as well as the general public.

It was great working with our TEAMING.AI consortium to deliver impactful change in human-AI interactions for the manufacturing sector. Looking forward to future collaborations.

Future-proofing a 120-year-old marble quarry

COREbeat is digitally transforming Marini Marmi, a historic marble quarry in the north of Italy.

Author: Alexandros Patrikios

July 5th 2024

Our sustainable future relies on longevity, which can be ensured through the meaningful restoration and modernisation of our historic past. That is the case for Marini Marmi, a historic stone transformation facility in the north of Italy, which has supplied material for a variety of large structures with significant heritage impact all over the world.

COREbeat is helping this historic facility modernise its legacy equipment and machinery, through the use of cutting-edge predictive maintenance algorithms.

The Dig_IT project

CORE Group’s collaboration with Marini Marmi stems from the Dig_IT project, a Horizon 2020-funded project which aims to address the needs of the mining industry, moving forward towards a sustainable use of resources while keeping people and the environment at the forefront. The Marini use-case of the Dig_IT project aims to reduce unpredicted downtime, contributing to overall productivity optimisation.

Where COREbeat comes in

COREbeat, CORE Group’s flagship predictive maintenance solution, promises to eliminate downtimes through the use of Deep Learning algorithms. Offering a 360-solution, COREbeat comprises compact hardware, AI-infused software and an intuitive web and mobile UI and has been applied to many production lines across Europe. Its capabilities present Marini Marmi with a pivotal next step in the digitalisation of their facilities.

At the Marini Marmi quarry, COREbeat has been installed in different assets of a large-scale marble-cutting engine. Installation took less than 2 hours, and Marini employees could immediately monitor the behavior of their gang saw. In less than 6 weeks, COREbeat’s predictive maintenance capabilities also became available.

When things break down

After the initial installation and training period, the quarry’s employees received a notification informing them that one of the parts required immediate attention. Within just one week, the part was in critical condition, with a COREbeat health score below 10% indicating that it was time for maintenance. The factory staff scheduled maintenance for the machine 4 days later, but the machine kept working for only another 3 days, breaking down within the indicated timeframe. The results were highly positive for COREbeat, but the Marini Marmi staff were faced with delays that might have been prevented.

Find out more

COREbeat is still up and running at Marini Marmi through the Dig_IT project. If you’re interested in finding out more about Dig_IT, you can follow the project’s dedicated page on social media. For all the rest of our 40+ EU research projects, you can find more information on our dedicated webpage.

And if you’re impressed with COREbeat’s predictive maintenance capabilities, you can reach out to info@core-beat.com, and learn how our team can help you.

Transforming Greek manufacturing with COREbeat

CORE Group’s all-in-one predictive maintenance platform is the go-to solution for EP.AL.ME., the leading Greek manufacturer.

Author: Alexandros Patrikios

April 11th 2024

COREbeat, CORE Group’s signature product, is here to cover an inherent need of the manufacturing sector – the seamless operation of its production machines.

COREbeat is an all-in-one predictive maintenance solution, encompassing compact hardware, AI-infused software, and a web and mobile UI. COREbeat operates by collecting data in real time using its built-in sensors, then employing machine learning algorithms to detect behavioural anomalies and provide early notifications of upcoming failures.
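For readers curious about what anomaly detection on sensor data can look like in its simplest form, here is a minimal Python sketch. It is not COREbeat's method: COREbeat relies on Deep Learning algorithms whose details are not described here, and this example swaps in a basic statistical check (a z-score against a baseline learned from a healthy training period). The function names, the threshold of 3 standard deviations, and the vibration readings are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def fit_baseline(training_readings):
    """Learn the mean and standard deviation of readings from a training period.

    Assumption: the training period reflects healthy machine behaviour.
    """
    return mean(training_readings), stdev(training_readings)


def find_anomalies(readings, baseline, threshold=3.0):
    """Return (index, value) pairs that deviate from the baseline mean
    by more than `threshold` standard deviations."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any different value counts as anomalous.
        return [(i, x) for i, x in enumerate(readings) if x != mu]
    return [
        (i, x)
        for i, x in enumerate(readings)
        if abs(x - mu) / sigma > threshold
    ]


# Hypothetical vibration amplitudes (arbitrary units), not real COREbeat data.
training = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0]
live = [1.0, 1.05, 2.5, 0.95]

baseline = fit_baseline(training)
anomalies = find_anomalies(live, baseline)
print(anomalies)  # [(2, 2.5)]
```

In this toy example only the third live reading is flagged, since it sits far outside the spread seen during training. A production system would typically work on many sensor channels at once and use far richer models than a single threshold.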

The collaboration

Since last summer, COREbeat has been the driving force behind EP.AL.ME.’s predictive maintenance capabilities.

A subsidiary of MYTILINEOS, EP.AL.ME. is an Aluminium Recycling company that specializes in the processing and sorting of scrap metal and the production (smelting) of recycled Aluminium billets. Their collaboration with CORE Group started as part of the e-CODOMH cluster, whose mission is to upgrade entrepreneurship and create added value in the Greek construction sector.

Installation

The initial installation of beatBox, COREbeat’s hardware component comprising IoT and Computing Edge devices, takes only 2 hours. Upon installation, employees can monitor the behaviour of their machine right away, through COREbeat’s intuitive User Interface. The predictive maintenance capabilities begin at 4 to 6 weeks, and that’s when COREbeat’s Deep Learning magic comes in at full force. After this initial training period, factory employees receive instant notifications through the app for any anomalies. This way, they know immediately whenever the operational health of a machine asset starts declining.

Below, we have some photos of the EP.AL.ME. installation by the COREbeat team.

How COREbeat helped EP.AL.ME.

EP.AL.ME. came to appreciate COREbeat’s predictive maintenance capabilities soon after installation. Maintenance employees at the facility were notified that one of the fans in the aluminum recycling facility was in critical condition, a few days after scheduled maintenance had already taken place. The factory staff, upon inspection, could not find the source of the malfunction, so they continued operating the fan normally.

In the COREbeat interface, the fan kept appearing to be in critical and worsening condition over the span of 2 weeks, which led the staff to pause its operation for an unscheduled maintenance check. During this check, they found an issue with the motor belt of the fan, which they would have missed without COREbeat. This saved the facility from unexpected downtime, delays in the factory’s production pipeline, and the substantial losses that would have followed.

Find out more

COREbeat’s success relies on CORE Group’s long experience in the field of machine learning for manufacturing. With a long list of over 40 EU projects, CORE Group is turning into a household name in AI technologies and their industrial applications.

If you are interested in COREbeat’s predictive maintenance capabilities, you can reach out to our team and share more information on the needs of your manufacturing facility through info@core-beat.com.

The InComEss project wraps up

Authors: Clio Drimala, Dimitris Eleftheriou

19th March 2024

Having successfully completed 4 years of operations, the InComEss project officially wrapped up its activities last month, and held the project’s Final Review Meeting with the European Commission’s Project Officer on March 13, in Brussels, at the premises of SONACA.

With a core team of 18 partners from 10 countries, InComEss entered into force in March 2020. Now, after a four-year lifespan, the project has yielded remarkable results, including more than 17 open-access academic publications, and has driven outstanding research on the development of polymer-based smart materials with energy harvesting and storage capabilities in a cost-efficient manner for the widespread implementation of the Internet of Things (IoT).

CORE Group was involved in various tasks within the project framework to expand InComEss’s impact. In particular, we were responsible for devising and managing the consortium’s exploitation strategy, as well as leading the dissemination and communication strategy.

Project overview

Besides our involvement, overall achievements of the project include the development of:

Piezoelectric and thermoelectric energy harvesters with a proven ability to generate electricity through mechanical vibrations and temperature differences.

Monolithic printed supercapacitors that demonstrated their efficacy to store the harvested energy when integrated with a conditioner circuit and generators.

A power conditioning circuit that enhances energy transfer efficiency between generators and end-use electronics.

A miniaturized Fibre Optic Sensors (FOS) interrogator with reduced power consumption, showcased for its utility in energy harvesting.

Furthermore, Bluetooth Wireless MEMS and FOS communications were optimized and seamlessly integrated into an IoT platform, offering data monitoring capabilities. Among the research highlights being implemented within InComEss are also three impactful use-cases within the aeronautic, automotive, and smart buildings sectors.

Exploitation activities